Challenges

The challenges have concluded. We thank all participants and welcome all to participate in next year’s challenges!

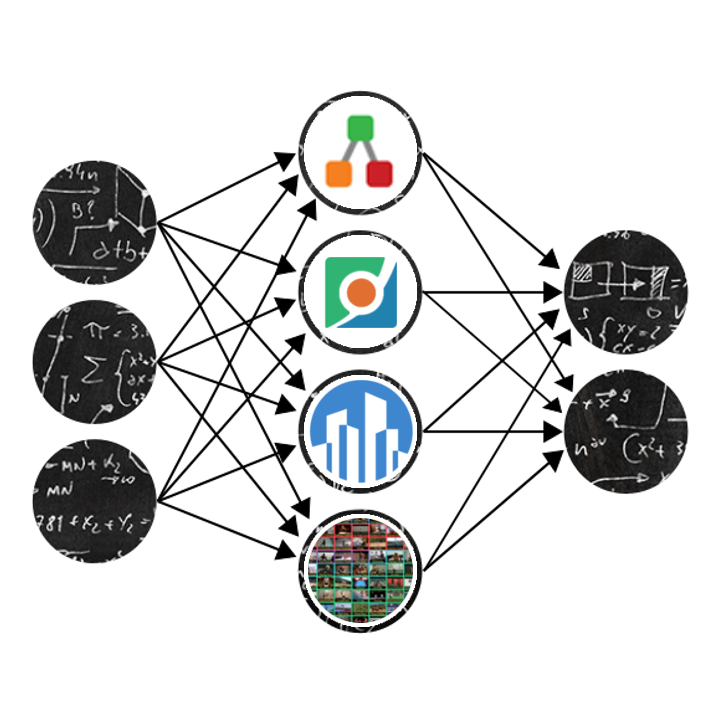

We present the “Visual Inductive Priors for Data-Efficient Computer Vision” challenges. We offer four challenges in which models are to be trained from scratch and the number of training samples is reduced to a fraction of the full set. The winners of each challenge are invited to present their winning method at the VIPriors workshop at ECCV 2020. The four data-deficient challenges are:

- Image classification on ImageNet

- Semantic segmentation on Cityscapes

- Object detection on MS COCO

- Action recognition on UCF-101

These tasks were chosen to encourage researchers from any background to participate: no giant GPU clusters are needed, nor will training for a long time yield much improvement over the baseline results.

Final rankings

Listed below are the final rankings for each challenge, after reviewing all submissions for which a technical report or paper was received. We congratulate the winners! For more details on the final rankings, please visit the corresponding CodaLab competition for each challenge.

Image Classification

- Pengfei Sun, Xuan Jin, Wei Su, Yuan He, Hui Xue, Quan Lu. Alibaba Group

- (Shared) Zhipeng Luo, Ge Li, Zhiguang Zhang. DeepBlue Technology (Shanghai) Co., Ltd

(Shared) Zhao Bingchen, Wen Xin. Megvii Research Nanjing, Tongji University

- Byeongjo Kim, Chanran Kim, Jaehoon Lee, Jein Song, Gyoungsoo Park. Zuminternet

- Samsung-SLSI-MSL-SS (aka CodaLab user Ben1365)

Semantic Segmentation

- Chen Weitao, Wang Zhibing. Alibaba Group

- Qingfeng Liu, Behnam Babagholami Mohamadabadi, Mostafa El-Khamy, Jungwon Lee. Samsung-SLSI-MSL-SS

- Chih-Chung Hsu, Hsin-Ti Ma. National Pingtung University of Science and Technology

- V. Buğra Yeşilkaynak, Yusuf H. Sahin, G. Unal. Istanbul Technical University, Computer Engineering

- Rafal Pytel, Tomasz Motyka. Delft University of Technology

Object Detection

- Fei Shen, Xin He, Mengwan Wei, Yi Xie. Huaqiao University, Wuhan University Of Technology

- Yinzheng Gu, Yihan Pan, Shizhe Chen. Jilian Technology Group

- Zhipeng Luo, Lixuan Che. DeepBlue Technology (Shanghai) Co., Ltd

Action Recognition

- Ishan Dave, Kali Carter, Mubarak Shah. Center for Research in Computer Vision (CRCV), University of Central Florida, LeTourneau University

- Haoyu Chen, Zitong Yu, Xin Liu, Wei Peng, Yoon Lee, Guoying Zhao. University of Oulu, Delft University of Technology

- Zhipeng Luo, Dawei Xu, Zhiguang Zhang. DeepBlue Technology (Shanghai) Co., Ltd

- Taeoh Kim, Hyeongmin Lee, MyeongAh Cho, Ho Seong Lee, Dong Heon Cho, Sangyoun Lee. Yonsei University, Cognex Deep Learning Lab

Important dates

- Challenges open: March 11, 2020

- Challenges close: July 10, 2020

- Technical reports due: July 17, 2020

- Winners announced: July 24, 2020

The challenges have been completed! Please see the final rankings above.

Data

As training data for these challenges we use subsets of publicly available datasets. We do not provide the data directly; instead, our toolkit includes tooling to generate the subsets from the canonical versions of the full datasets. Please refer to Resources for details.
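The exact procedure the toolkit uses to build each subset is not described on this page. As a rough illustration only, the sketch below shows one common way to derive a reproducible, class-balanced subset from a full dataset index. The function name `sample_subset`, the `(item_id, label)` input format, the `per_class` parameter, and the fixed seed are all assumptions made for this example and are not taken from the toolkit.

```python
import random
from collections import defaultdict


def sample_subset(labelled_items, per_class, seed=0):
    """Pick at most `per_class` items per label, reproducibly.

    `labelled_items` is an iterable of (item_id, label) pairs.
    This is a hypothetical helper, not part of the VIPriors toolkit.
    """
    by_label = defaultdict(list)
    for item_id, label in labelled_items:
        by_label[label].append(item_id)

    # A fixed seed means everyone who runs this gets the same subset.
    rng = random.Random(seed)
    subset = []
    for label in sorted(by_label):  # sorted for a stable iteration order
        ids = sorted(by_label[label])  # normalise input order before shuffling
        rng.shuffle(ids)
        subset.extend((item_id, label) for item_id in ids[:per_class])
    return subset


if __name__ == "__main__":
    # Toy stand-in for a full dataset index: 3 classes with 10 items each.
    full = [(f"img_{c}_{i}", c) for c in ("cat", "dog", "bird") for i in range(10)]
    train = sample_subset(full, per_class=4, seed=0)
    print(len(train), "items selected")
```

Sorting both the labels and the item identifiers before shuffling makes the result independent of the order in which the full dataset is listed, which matters when all participants must train on the same subset.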

Rules

- We prohibit the use of any data other than the provided training data, i.e., no pre-training and no transfer learning.

- For submissions on CodaLab to qualify for the challenge, we require the authors to submit either a technical report or a full paper about their final submission. See details below under “Report”. Submissions without an associated report or paper do not qualify for the competition.

- Top contenders in the challenge may be required to submit their submissions to peer review to ensure reproducibility and that the rules of the challenge were followed. The organizers will contact contenders for this when necessary after the challenges close.

- Organizers retain the right to disqualify any submissions that violate these rules.

- The winners of each of the four challenges will get an opportunity to present their method at the VIPriors workshop at ECCV 2020. The organizers will contact contenders that are eligible for this opportunity after the challenges close.

Resources

To accommodate submissions to the challenges we provide a toolkit that contains

- Python tools for generating the appropriate training and validation data;

- documentation of the required submission format for the challenges;

- implementations of the baseline models for each challenge.

See the GitHub repository of the toolkit here.

Questions

If you have any questions, please first check the Frequently Asked Questions in the toolkit repository. If your question persists, you can ask it on the forums of the specific challenge on the CodaLab website. If you need to ask us a question in private, you can email us at vipriors-ewi AT tudelft DOT nl.